
HW 9 Mathematics 503, Mathematical Modeling, CSUF, July 16, 2007

Nasser M. Abbasi

October 8, 2025


1 Problem 8 page 362 section 6.3

problem:

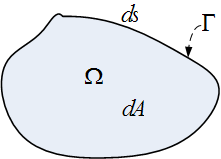

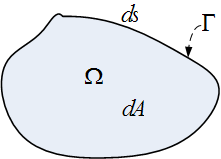

Consider the problem of minimizing the functional \(J\left ( u\right ) ={\displaystyle \int \limits _{\Omega }} L\left ( \mathbf {x},u,\nabla u\right ) d\mathbf {x}\) over all \(u\in C^{2}\left ( \Omega \right ) \) with \(u\left ( \mathbf {x}\right ) =f\left ( \mathbf {x}\right ) \) on the boundary \(\Gamma \), where \(f\) is a given function and \(\Omega \) is a bounded, well-behaved region in \(\mathbb {R} ^{2}.\)

(a) Show that the first variation is as given below, where \(L\) stands for \(L\left ( \mathbf {x},u,\nabla u\right ) \) and \(\mathbf {x}\) is the vector \(\begin {bmatrix} x_{1}\\ x_{2}\end {bmatrix} \)

\begin{align*} \delta J\left ( u,\mathbf {h}\right ) & ={\displaystyle \int \limits _{\Omega }} L_{u}h\mathbf {+}L_{\nabla u}\cdot \nabla h\mathbf {\ \ \ }dA\\ & ={\displaystyle \int \limits _{\Omega }} \left ( L_{u}\mathbf {-\nabla \cdot }L_{\nabla u}\right ) h\mathbf {\ \ }dA\mathbf {\ -}{\displaystyle \int \limits _{\Gamma }} hL_{\nabla u}\cdot \mathbf {n\ }ds \end{align*}

Where \(L_{\nabla u}\) is the vector \(\begin {bmatrix} L_{\left ( \frac {\partial u}{\partial x_{1}}\right ) }\\ L_{\left ( \frac {\partial u}{\partial x_{2}}\right ) }\end {bmatrix} =\begin {bmatrix} \frac {\partial L}{\partial \left ( \frac {\partial u}{\partial x_{1}}\right ) }\\ \frac {\partial L}{\partial \left ( \frac {\partial u}{\partial x_{2}}\right ) }\end {bmatrix} \) and \(\mathbf {h}\in C^{2}\left ( \Omega \right ) \) with \(h\left ( \mathbf {x}\right ) =\mathbf {0}\) at the boundary \(\Gamma \)

(b) Show that a necessary condition for \(u\) to minimize \(J\) is that \(u\) satisfies the Euler equation \(L_{u}\mathbf {-\nabla \cdot }L_{\nabla u}=0\), \(\mathbf {x}\in \Omega \)

(c) If \(u\) is not fixed on the boundary \(\Gamma \), find the natural boundary conditions.

Answer

(a)

\begin{align*} J\left ( u\right ) & ={\displaystyle \int \limits _{\Omega }} L\left ( \mathbf {x},u,\nabla u\right ) dA\\ J\left ( u+th\right ) & ={\displaystyle \int \limits _{\Omega }} L\left ( \mathbf {x},u+th,\nabla \left ( u+th\right ) \right ) dA\\ & ={\displaystyle \int \limits _{\Omega }} L\left ( \mathbf {x},u+th,\nabla u+t\nabla h\right ) \ dA \end{align*}

Hence

\begin{align*} \frac {dJ\left ( u+th\right ) }{dt} & =\frac {d}{dt}{\displaystyle \int \limits _{\Omega }} L\left ( \mathbf {x},u+th,\nabla u+t\nabla h\right ) \ d\mathbf {x}\\ & ={\displaystyle \int \limits _{\Omega }} \frac {\partial L}{\partial \left ( u+th\right ) }h+\left ( \frac {\partial L}{\partial \left ( \nabla u+t\nabla h\right ) }\cdot \nabla h\right ) \ \ d\mathbf {x}\end{align*}

But \(\delta J\left ( u,\mathbf {h}\right ) =\lim _{t\rightarrow 0}\frac {dJ\left ( u+th\right ) }{dt}\), hence at \(t=0\) the above becomes

\begin{equation} \delta J\left ( u,\mathbf {h}\right ) ={\displaystyle \int \limits _{\Omega }} \frac {\partial L}{\partial u}h+\left ( \frac {\partial L}{\partial \left ( \nabla u\right ) }\cdot \nabla h\right ) \ \ dA\tag {1}\end{equation}

But \(\nabla u=\begin {bmatrix} \frac {\partial u}{\partial x_{1}}\\ \frac {\partial u}{\partial x_{2}}\end {bmatrix} \), hence \(\frac {\partial L}{\partial \left ( \nabla u\right ) }=\begin {bmatrix} \frac {\partial L}{\partial \left ( \frac {\partial u}{\partial x_{1}}\right ) }\\ \frac {\partial L}{\partial \left ( \frac {\partial u}{\partial x_{2}}\right ) }\end {bmatrix} \), and \(\nabla h=\begin {bmatrix} \frac {\partial h}{\partial x_{1}}\\ \frac {\partial h}{\partial x_{2}}\end {bmatrix} \), therefore

\begin{align*} \frac {\partial L}{\partial \left ( \nabla u\right ) }\cdot \nabla h & =\begin {bmatrix} \frac {\partial L}{\partial \left ( \frac {\partial u}{\partial x_{1}}\right ) }\\ \frac {\partial L}{\partial \left ( \frac {\partial u}{\partial x_{2}}\right ) }\end {bmatrix} ^{T}\begin {bmatrix} \frac {\partial h}{\partial x_{1}}\\ \frac {\partial h}{\partial x_{2}}\end {bmatrix} \\ & =\frac {\partial L}{\partial \left ( \frac {\partial u}{\partial x_{1}}\right ) }\frac {\partial h}{\partial x_{1}}+\frac {\partial L}{\partial \left ( \frac {\partial u}{\partial x_{2}}\right ) }\frac {\partial h}{\partial x_{2}}\\ & =L_{u_{x_{1}}}h_{x_{1}}+L_{u_{x_{2}}}h_{x_{2}}\end{align*}

Hence (1) becomes

\begin{equation} \delta J\left ( u,\mathbf {h}\right ) ={\displaystyle \int \limits _{\Omega }} L_{u}h+\left ( L_{u_{x_{1}}}h_{x_{1}}+L_{u_{x_{2}}}h_{x_{2}}\right ) \ \ dA \tag {2}\end{equation}
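As a concrete check of (2), here is a short worked instance. The Lagrangian and the source term \(g\) used here are illustrative assumptions and are not part of the book problem. Take \(L=\frac {1}{2}\left \vert \nabla u\right \vert ^{2}+g\left ( \mathbf {x}\right ) u\) and differentiate \(J\left ( u+th\right ) \) directly:

\begin{align*} J\left ( u+th\right ) & ={\displaystyle \int \limits _{\Omega }} \frac {1}{2}\left \vert \nabla u+t\nabla h\right \vert ^{2}+g\left ( u+th\right ) \ dA\\ \frac {dJ\left ( u+th\right ) }{dt} & ={\displaystyle \int \limits _{\Omega }} \left ( \nabla u+t\nabla h\right ) \cdot \nabla h+gh\ dA\\ \left . \frac {dJ\left ( u+th\right ) }{dt}\right \vert _{t=0} & ={\displaystyle \int \limits _{\Omega }} gh+\left ( u_{x_{1}}h_{x_{1}}+u_{x_{2}}h_{x_{2}}\right ) \ dA \end{align*}

This agrees with (2), since for this choice \(L_{u}=g\) and \(L_{u_{x_{i}}}=u_{x_{i}}\).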

Now

\[ \frac {\partial }{\partial x_{i}}\left ( L_{u_{x_{i}}}h\right ) =\frac {\partial L_{u_{x_{i}}}}{\partial x_{i}}h+L_{u_{x_{i}}}h_{x_{i}}\]

Hence

\[ L_{u_{x_{i}}}h_{x_{i}}=\frac {\partial }{\partial x_{i}}\left ( L_{u_{x_{i}}}h\right ) -\frac {\partial L_{u_{x_{i}}}}{\partial x_{i}}h \]

Substituting the above into (2) for \(i=1,2\), we obtain

\begin{align} \delta J\left ( u,\mathbf {h}\right ) & ={\displaystyle \int \limits _{\Omega }} L_{u}h+\left ( \frac {\partial }{\partial x_{1}}\left ( L_{u_{x_{1}}}h\right ) -\frac {\partial L_{u_{x_{1}}}}{\partial x_{1}}h+\frac {\partial }{\partial x_{2}}\left ( L_{u_{x_{2}}}h\right ) -\frac {\partial L_{u_{x_{2}}}}{\partial x_{2}}h\right ) \ \ dA\nonumber \\ & ={\displaystyle \int \limits _{\Omega }} \left ( L_{u}-\frac {\partial L_{u_{x_{1}}}}{\partial x_{1}}-\frac {\partial L_{u_{x_{2}}}}{\partial x_{2}}\right ) h\ dA+{\displaystyle \int \limits _{\Omega }} \left ( \frac {\partial }{\partial x_{1}}\left ( L_{u_{x_{1}}}h\right ) +\frac {\partial }{\partial x_{2}}\left ( L_{u_{x_{2}}}h\right ) \right ) \ \ dA\tag {3}\end{align}

Now use Green's theorem, with \(\Gamma \) traversed counterclockwise:

\[{\displaystyle \int \limits _{\Omega }} \left ( \frac {\partial Q}{\partial x_{1}}-\frac {\partial P}{\partial x_{2}}\right ) dx_{1}dx_{2}=\int _{\Gamma }Pdx_{1}+Qdx_{2}\]

Let \(Q\equiv L_{u_{x_{1}}}h,P\equiv -L_{u_{x_{2}}}h\), so that Green's theorem becomes

\[{\displaystyle \int \limits _{\Omega }} \left ( \frac {\partial }{\partial x_{1}}\left ( L_{u_{x_{1}}}h\right ) +\frac {\partial }{\partial x_{2}}\left ( L_{u_{x_{2}}}h\right ) \right ) \ dx_{1}dx_{2}=\int _{\Gamma }\left ( -L_{u_{x_{2}}}dx_{1}+L_{u_{x_{1}}}dx_{2}\right ) h \]

Substituting the above into the second term of (3), we obtain (noting that \(dA=dx_{1}dx_{2}\) since we are in \(\mathbb {R} ^{2}\))

\begin{equation} \delta J\left ( u,\mathbf {h}\right ) ={\displaystyle \int \limits _{\Omega }} \left ( L_{u}-\frac {\partial L_{u_{x_{1}}}}{\partial x_{1}}-\frac {\partial L_{u_{x_{2}}}}{\partial x_{2}}\right ) h\ dA+\int _{\Gamma }\left ( L_{u_{x_{1}}}dx_{2}-L_{u_{x_{2}}}dx_{1}\right ) h\tag {4}\end{equation}

But the second integral above can be rewritten as (by dividing and multiplying by \(ds\))

\[ \int _{\Gamma }\left ( L_{u_{x_{1}}}dx_{2}-L_{u_{x_{2}}}dx_{1}\right ) h\equiv \int _{\Gamma }\left ( L_{u_{x_{1}}}\frac {dx_{2}}{ds}-L_{u_{x_{2}}}\frac {dx_{1}}{ds}\right ) h\ \ ds \]

Hence (4) becomes

\begin{equation} \delta J\left ( u,\mathbf {h}\right ) ={\displaystyle \int \limits _{\Omega }} \left ( L_{u}-\frac {\partial L_{u_{x_{1}}}}{\partial x_{1}}-\frac {\partial L_{u_{x_{2}}}}{\partial x_{2}}\right ) h\ dA+\int _{\Gamma }\left ( L_{u_{x_{1}}}\frac {dx_{2}}{ds}-L_{u_{x_{2}}}\frac {dx_{1}}{ds}\right ) h\ \ ds\tag {5}\end{equation}

Now the tangent vector to the boundary at the point \((x_{1},x_{2})\) is given by the vector \(\left ( \frac {dx_{1}}{ds},\frac {dx_{2}}{ds}\right ) ^{T}\), hence the normal is \(\mathbf {n}=\left ( \frac {dx_{2}}{ds},-\frac {dx_{1}}{ds}\right ) ^{T}\) (since the dot product of these two vectors is zero). We can therefore rewrite the integrand of the second integral in (5) in terms of this normal vector, since

\begin{align*} L_{u_{x_{1}}}\frac {dx_{2}}{ds}-L_{u_{x_{2}}}\frac {dx_{1}}{ds} & =\begin {bmatrix} L_{u_{x_{1}}}\\ L_{u_{x_{2}}}\end {bmatrix} ^{T}\begin {bmatrix} \frac {dx_{2}}{ds}\\ -\frac {dx_{1}}{ds}\end {bmatrix} \\ & =\begin {bmatrix} L_{u_{x_{1}}}\\ L_{u_{x_{2}}}\end {bmatrix} \cdot \mathbf {n}\\ & =L_{\nabla u}\cdot \mathbf {n}\end{align*}

Substituting the above into the second term of (5), we obtain

\[ \fbox {$\delta J\left ( u,\mathbf {h}\right ) ={\displaystyle \int \limits _{\Omega }} \left ( L_{u}-\frac {\partial L_{u_{x_{1}}}}{\partial x_{1}}-\frac {\partial L_{u_{x_{2}}}}{\partial x_{2}}\right ) h\ dA+\int _{\Gamma }h\left ( L_{\nabla u}\cdot \mathbf {n}\right ) \ \ ds$}\]

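A final note on the sign before the second integral above. The book shows it as \("-"\). Green's theorem assumes that \(\Gamma \) is traversed counterclockwise, and for that orientation the normal \(\mathbf {n}=\left ( \frac {dx_{2}}{ds},-\frac {dx_{1}}{ds}\right ) ^{T}\) used here points outward. For example, on the unit circle \(x_{1}=\cos s,x_{2}=\sin s\) it gives \(\mathbf {n}=\left ( \cos s,\sin s\right ) ^{T}\), which points away from the origin. The \("+"\) sign derived above is therefore the one that goes with the outward normal. The \("-"\) sign in the book is recovered only if \(\mathbf {n}\) is taken to be the inward normal, or else it is a typo. The sign does not affect parts (b) and (c), where the boundary term either vanishes or is set to zero. Notice also that the book has a typo: it says \(\int _{\Gamma }h\left ( L_{\nabla u}\cdot \mathbf {n}\right ) \ \ dA\) instead of \(\int _{\Gamma }h\left ( L_{\nabla u}\cdot \mathbf {n}\right ) \ \ ds\). The integration is along the boundary curve, not over a differential area, since this is a contour integral.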

(b)

A necessary condition for a minimum is that \(\delta J\left ( u,\mathbf {h}\right ) =0\), i.e., using the first variation derived in part (a),

\[{\displaystyle \int \limits _{\Omega }} \left ( L_{u}-\frac {\partial L_{u_{x_{1}}}}{\partial x_{1}}-\frac {\partial L_{u_{x_{2}}}}{\partial x_{2}}\right ) h\ dA+\int _{\Gamma }h\left ( L_{\nabla u}\cdot \mathbf {n}\right ) \ \ ds=0 \]

Since \(h=0\) on \(\Gamma \), the second integral vanishes whatever its sign, and we are left with

\[{\displaystyle \int \limits _{\Omega }} \left ( L_{u}-\frac {\partial L_{u_{x_{1}}}}{\partial x_{1}}-\frac {\partial L_{u_{x_{2}}}}{\partial x_{2}}\right ) h\ dA=0 \]

But \(h\) is an arbitrary function, hence by lemma 3.13 the above can be zero for every such \(h\) only if the integrand vanishes:

\begin{align*} L_{u}-\frac {\partial L_{u_{x_{1}}}}{\partial x_{1}}-\frac {\partial L_{u_{x_{2}}}}{\partial x_{2}} & =0\\ L_{u}-\nabla \cdot L_{\nabla u} & =0\ \ \ \ \ \ \ \ \ \text {for}\ \mathbf {x}\in \Omega \end{align*}

which is the Euler-Lagrange equation.
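As an illustration of this result, consider a sketch with an assumed Lagrangian (the choice of \(L\) and the source term \(g\) are assumptions made for the example, not part of the book problem): \(L=\frac {1}{2}\left \vert \nabla u\right \vert ^{2}+g\left ( \mathbf {x}\right ) u\). Then

\begin{align*} L_{u} & =g,\ \ \ \ \ L_{\nabla u}=\begin {bmatrix} u_{x_{1}}\\ u_{x_{2}}\end {bmatrix} =\nabla u\\ L_{u}-\nabla \cdot L_{\nabla u} & =g-\left ( u_{x_{1}x_{1}}+u_{x_{2}x_{2}}\right ) =0 \end{align*}

so a minimizer must satisfy Poisson's equation \(\nabla ^{2}u=g\) in \(\Omega \) with \(u=f\) on \(\Gamma \). For \(g=0\) this reduces to Laplace's equation.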

(c)

Here we have free boundary conditions, hence we can no longer require \(h=0\) on \(\Gamma \). Starting with the first variation

\[ \delta J\left ( u,\mathbf {h}\right ) ={\displaystyle \int \limits _{\Omega }} \left ( L_{u}-\frac {\partial L_{u_{x_{1}}}}{\partial x_{1}}-\frac {\partial L_{u_{x_{2}}}}{\partial x_{2}}\right ) h\ dA+\int _{\Gamma }h\left ( L_{\nabla u}\cdot \mathbf {n}\right ) \ \ ds=0 \]

First take those \(h\) that vanish on \(\Gamma \). As in part (b), this shows that the Euler equation holds in \(\Omega \), so the first integral is zero for every \(h\). The boundary integral must then vanish for \(h\) arbitrary on \(\Gamma \), and by the same argument as in lemma 3.13, applied along \(\Gamma \), we conclude that \(L_{\nabla u}\cdot \mathbf {n=0}\) on \(\Gamma \)

Hence on \(\mathbb {R} ^{2}\), this means \(\begin {bmatrix} L_{u_{x_{1}}}\\ L_{u_{x_{2}}}\end {bmatrix} ^{T}\begin {bmatrix} \frac {dx_{2}}{ds}\\ -\frac {dx_{1}}{ds}\end {bmatrix} =0\), i.e.

\[ L_{u_{x_{1}}}\frac {dx_{2}}{ds}-L_{u_{x_{2}}}\frac {dx_{1}}{ds}=0 \]

Now we need to know the shape of the boundary to evaluate the above at each point. For example, for the unit circle centered at the origin, \(x_{1}=\cos s,x_{2}=\sin s\), so \(\frac {dx_{2}}{ds}=x_{1}\), \(\frac {dx_{1}}{ds}=-x_{2}\), \(\mathbf {n}=\begin {bmatrix} x_{1}\\ x_{2}\end {bmatrix} \), and the above becomes

\[ L_{u_{x_{1}}}x_{1}+L_{u_{x_{2}}}x_{2}=0 \]

This equation must be satisfied at each point on the boundary (in a numerical solution, at each boundary point after discretization).
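For a concrete instance of the natural boundary condition \(L_{\nabla u}\cdot \mathbf {n}=0\), consider a sketch with an assumed Lagrangian (the choice of \(L\) and the source term \(g\) are assumptions made for the example, not part of the book problem): \(L=\frac {1}{2}\left \vert \nabla u\right \vert ^{2}+g\left ( \mathbf {x}\right ) u\). Here \(L_{\nabla u}=\nabla u\), so

\[ L_{\nabla u}\cdot \mathbf {n}=\nabla u\cdot \mathbf {n}=\frac {\partial u}{\partial n}=0\ \ \ \ \ \text {on}\ \Gamma \]

This is the homogeneous Neumann condition: when no value of \(u\) is prescribed on \(\Gamma \), the minimizer has zero normal derivative there.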